2025- Climate Change Communication Research

We are participating in the Climate Change Communication Research Unit at the Institute for Future Initiatives, The University of Tokyo.

2023-2024 AI & Climate ELSI-RRI

This project develops an ELSI/RRI sheet for climate engineering scenarios (PI: Hiromi Yokoyama). In parallel, we conducted research on trust in AI and trust in science. The project is linked to the first wave of the STS Barometer.

2025.3 Wang, S., Wang, T., Yokoyama, H.M. et al. Beyond a single pole: exploring the nuanced coexistence of scientific elitism and populism in China. Humanit Soc Sci Commun 12, 353 (2025). https://doi.org/10.1057/s41599-025-04685-3

Letters

Generative AI was released during this period and called for extensive discussion, so we wrote many letters.

2024.5 Wang, S., Kinoshita, S., & Yokoyama, H. M. (2024). Write your paper on the motherland? Accountability in Research, 1–3. https://doi.org/10.1080/08989621.2024.2347398

2024.4 Mayo Clinic Proceedings: Digital Health (letter) Cautions and Considerations in Artificial Intelligence Implementation for Child Abuse: Lessons from Japan, Kinoshita, Yokoyama, Kishimoto

2024.3 Nature Reviews Physics (Viewpoint) Generative AI and science communication in the physical sciences

2023.6 Nature (Correspondence) Shotaro Kinoshita & Hiromi Yokoyama, Large language model is a flagship for Japan, Nature 619, 252 (2023), doi: https://doi.org/10.1038/d41586-023-02230-3

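2023.5 Nikkei newspaper (Keizai Kyoshitsu column) "Adhere to the 'Eight AI Principles' as guidelines for AI ethics" (Artificial Intelligence and Society), Hiromi Yokoyama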

2022-2023 AI Diversity ELSI

Beyond AI Project

2024.6 Ikkatai, Y., Itatsu, Y., Hartwig, T. et al. The relationship between the attitudes of the use of AI and diversity awareness: comparisons between Japan, the US, Germany, and South Korea. AI & Soc (2024).

We are conducting collaborative ELSI research on face recognition, centered at the Technical University of Munich.

2024.6 Attitudes Toward Facial Analysis AI: A Cross-National Study Comparing Argentina, Kenya, Japan, and the USA. Proceedings of the 2024 ACM Conference on Fairness, Accountability, and Transparency.

2020-2022 Score ELSI / Measuring the Ethics of Science and Technology

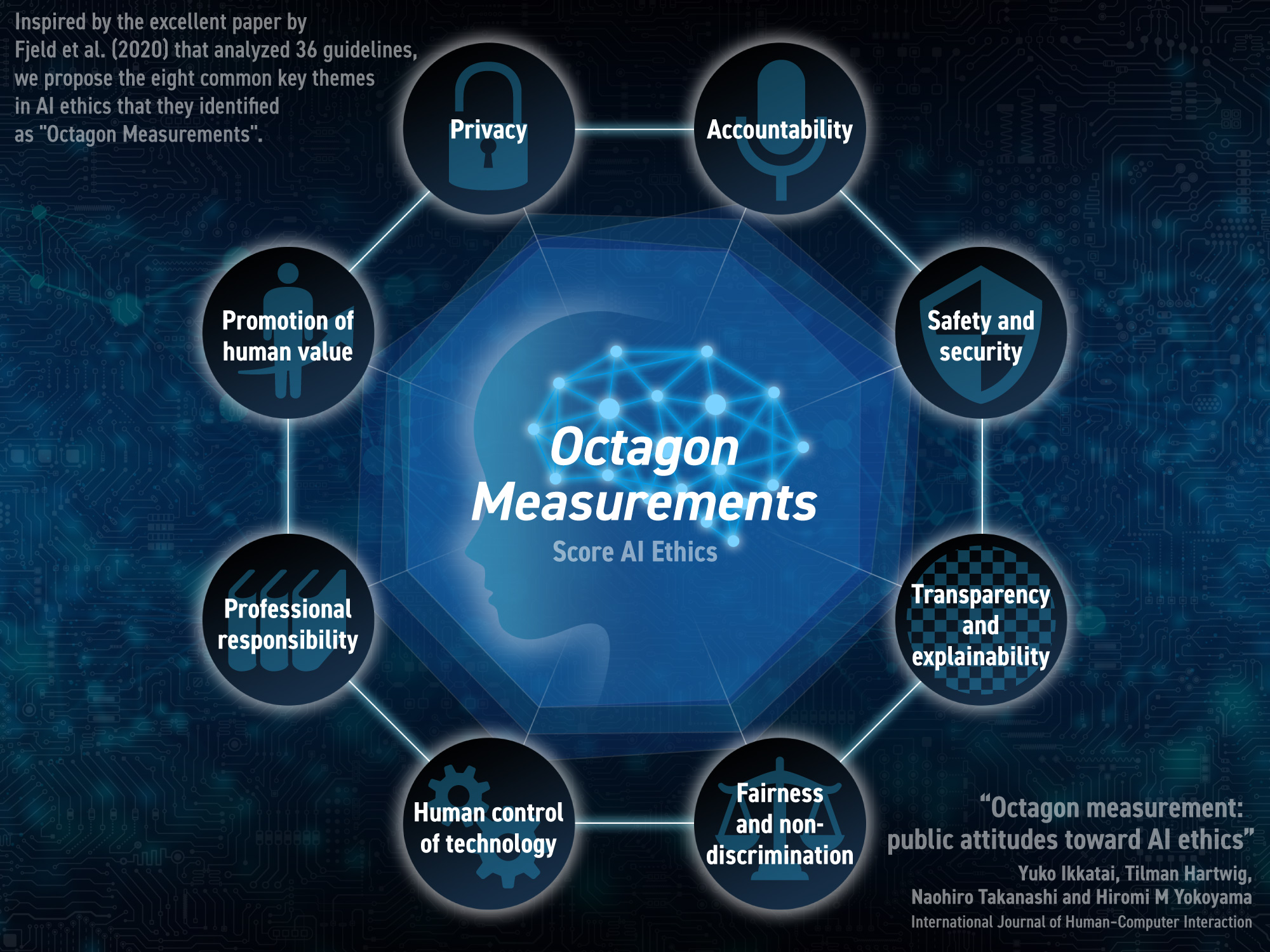

The ELSI project (2020.1-2022.12), "Construction of Science and Technology Ethics Indicators for Advanced Science and Technology such as AI and Genome Editing," is supported by a Specific Area Research Grant (ELSI field) from the SECOM Science and Technology Foundation. ELSI stands for the Ethical, Legal and Social Issues of science and technology. The project aims to develop a simple scale that scientists and engineers in R&D can use to measure the ELSI of advanced science and technology, such as AI and genome editing.

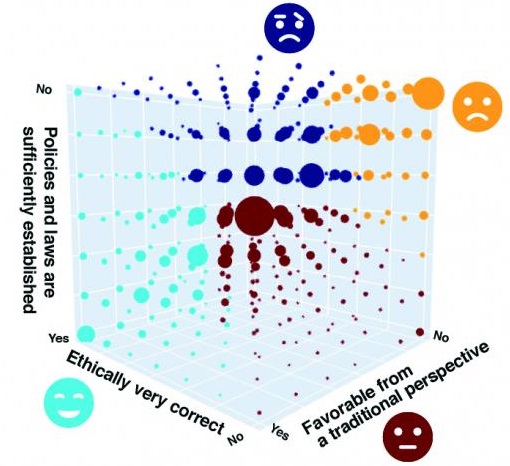

2022.9 Ikkatai, Y., Hartwig, T., Takanashi, N. et al. Segmentation of ethics, legal, and social issues (ELSI) related to AI in Japan, the United States, and Germany. AI Ethics (2022). https://doi.org/10.1007/s43681-022-00207-y (press release; Japanese press release)

2022.1 Hartwig, T., Ikkatai, Y., Takanashi, N. & Yokoyama, H.M. (2022). 'Artificial intelligence ELSI score for science and technology: a comparison between Japan and the US'. AI & Soc. https://doi.org/10.1007/s00146-021-01323-9

2022.1 Y. Ikkatai, T. Hartwig, N. Takanashi & H.M. Yokoyama (2022). 'Octagon Measurement: Public Attitudes toward AI Ethics',

Segmentation and Octagon Measurements